#predictive analytics and python in business

In today's data-driven world, businesses are constantly seeking innovative ways to leverage their vast amounts of data for competitive advantage.

Explore IGMPI’s Big Data Analytics program, designed for professionals seeking expertise in data-driven decision-making. Learn advanced analytics techniques, data mining, machine learning, and business intelligence tools to excel in the fast-evolving world of big data.

#Big Data Analytics#Data Science#Machine Learning#Predictive Analytics#Business Intelligence#Data Visualization#Data Mining#AI in Analytics#Big Data Tools#Data Engineering#IGMPI#Online Analytics Course#Data Management#Hadoop#Python for Data Science

Big Data vs. Traditional Data: Understanding the Differences and When to Use Python

In the evolving landscape of data science, understanding the nuances between big data and traditional data is crucial. Both play pivotal roles in analytics, but their characteristics, processing methods, and use cases differ significantly. Python, a powerful and versatile programming language, has become an indispensable tool for handling both types of data. This blog will explore the differences between big data and traditional data and explain when to use Python, emphasizing the importance of enrolling in a data science training program to master these skills.

What is Traditional Data?

Traditional data refers to structured data typically stored in relational databases and managed using SQL (Structured Query Language). This data is often transactional and includes records such as sales transactions, customer information, and inventory levels.

Characteristics of Traditional Data:

Structured Format: Traditional data is organized in a structured format, usually in rows and columns within relational databases.

Manageable Volume: The volume of traditional data is relatively small and manageable, often ranging from gigabytes to terabytes.

Fixed Schema: The schema, or structure, of traditional data is predefined and consistent, making it easy to query and analyze.

Use Cases of Traditional Data:

Transaction Processing: Traditional data is used for transaction processing in industries like finance and retail, where accurate and reliable records are essential.

Customer Relationship Management (CRM): Businesses use traditional data to manage customer relationships, track interactions, and analyze customer behavior.

Inventory Management: Traditional data is used to monitor and manage inventory levels, ensuring optimal stock levels and efficient supply chain operations.

What is Big Data?

Big data refers to extremely large and complex datasets that cannot be managed and processed using traditional database systems. It encompasses structured, unstructured, and semi-structured data from various sources, including social media, sensors, and log files.

Characteristics of Big Data:

Volume: Big data involves vast amounts of data, often measured in petabytes or exabytes.

Velocity: Big data is generated at high speed, requiring real-time or near-real-time processing.

Variety: Big data comes in diverse formats, including text, images, videos, and sensor data.

Veracity: Big data can be noisy and uncertain, requiring advanced techniques to ensure data quality and accuracy.
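To make the velocity point concrete, here is a minimal Python sketch of near-real-time processing: a rolling average that updates as each reading arrives while keeping only the last few values in memory. The function name `rolling_average` and the sample readings are hypothetical. Production systems would typically use a stream processor such as Spark Structured Streaming rather than a hand-written loop.

```python
from collections import deque
from typing import Iterable, Iterator


def rolling_average(stream: Iterable[float], window: int) -> Iterator[float]:
    """Yield the average of the most recent `window` readings as each one arrives.

    Only `window` values are held in memory, so the input stream itself can be
    unbounded (for example, readings arriving from a sensor feed).
    """
    if window <= 0:
        raise ValueError("window must be positive")
    recent = deque(maxlen=window)
    total = 0.0
    for value in stream:
        if len(recent) == window:
            total -= recent[0]  # this value is evicted by the append() below
        recent.append(value)
        total += value
        yield total / len(recent)


# Hypothetical temperature readings standing in for a live sensor feed.
readings = [20.0, 21.0, 23.0, 22.0, 30.0]
for avg in rolling_average(readings, window=3):
    print(round(avg, 2))
```

Because `rolling_average` is a generator, each average is produced as soon as its reading arrives instead of after the whole dataset has been collected.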

Use Cases of Big Data:

Predictive Analytics: Big data is used for predictive analytics in fields like healthcare, finance, and marketing, where it helps forecast trends and behaviors.

IoT (Internet of Things): Big data from IoT devices is used to monitor and analyze physical systems, such as smart cities, industrial machines, and connected vehicles.

Social Media Analysis: Big data from social media platforms is analyzed to understand user sentiments, trends, and behavior patterns.

Python: The Versatile Tool for Data Science

Python has emerged as the go-to programming language for data science due to its simplicity, versatility, and robust ecosystem of libraries and frameworks. Whether dealing with traditional data or big data, Python provides powerful tools and techniques to analyze and visualize data effectively.

Python for Traditional Data:

Pandas: The Pandas library in Python is ideal for handling traditional data. It offers data structures like DataFrames that facilitate easy manipulation, analysis, and visualization of structured data.

SQLAlchemy: Python's SQLAlchemy library provides a powerful toolkit for working with relational databases, allowing seamless integration with SQL databases for querying and data manipulation.
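As a rough illustration of SQLAlchemy in use, the sketch below calls SQLAlchemy Core's `create_engine` and `text()` against an in-memory SQLite database so it runs without a database server. The `sales` table and its rows are made up for the example, and the code assumes SQLAlchemy 1.4 or later.

```python
from sqlalchemy import create_engine, text

# In-memory SQLite keeps the example self-contained; in practice the URL would
# point at a real server, e.g. "postgresql+psycopg2://user:pass@host/dbname".
engine = create_engine("sqlite:///:memory:")

with engine.begin() as conn:  # begin() commits on success, rolls back on error
    conn.execute(text(
        "CREATE TABLE sales (id INTEGER PRIMARY KEY, region TEXT, amount REAL)"
    ))
    # Passing a list of dicts inserts several rows with one statement.
    conn.execute(
        text("INSERT INTO sales (region, amount) VALUES (:region, :amount)"),
        [
            {"region": "North", "amount": 120.0},
            {"region": "South", "amount": 80.0},
            {"region": "North", "amount": 60.0},
        ],
    )
    # Bound parameters (:min_total) are sent separately, never pasted into the SQL.
    rows = conn.execute(
        text(
            "SELECT region, SUM(amount) AS total FROM sales "
            "GROUP BY region HAVING SUM(amount) >= :min_total ORDER BY region"
        ),
        {"min_total": 50},
    ).all()

for region, total in rows:
    print(region, total)
```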

Python for Big Data:

PySpark: PySpark, the Python API for Apache Spark, is designed for big data processing. It enables distributed computing and parallel processing, making it suitable for handling large-scale datasets.

Dask: Dask is a flexible parallel computing library in Python that scales from single machines to large clusters, making it an excellent choice for big data analytics.
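PySpark and Dask share the same basic idea: split a dataset into partitions, process each partition independently, then combine the partial results. The standard-library sketch below imitates that pattern with a thread pool on one machine. It is a conceptual stand-in, not PySpark or Dask code, and because of Python's global interpreter lock the threads illustrate the structure more than they speed up CPU-bound work. The function names and log lines are hypothetical.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import List


def partition(records: List[str], n_parts: int) -> List[List[str]]:
    """Split records into roughly equal chunks (Spark and Dask call these partitions)."""
    size = max(1, -(-len(records) // n_parts))  # ceiling division
    return [records[i:i + size] for i in range(0, len(records), size)]


def count_words(chunk: List[str]) -> Counter:
    """'Map' step: each worker counts words in its own partition only."""
    counts: Counter = Counter()
    for line in chunk:
        counts.update(line.lower().split())
    return counts


def distributed_word_count(records: List[str], n_parts: int = 4) -> Counter:
    """Run count_words on every partition, then merge the partial counts ('reduce')."""
    total: Counter = Counter()
    with ThreadPoolExecutor(max_workers=n_parts) as pool:
        for partial in pool.map(count_words, partition(records, n_parts)):
            total.update(partial)
    return total


logs = ["error disk full", "ok", "error timeout", "ok", "ok retry"]
print(distributed_word_count(logs, n_parts=2).most_common(2))
```

In PySpark or Dask, the partitions would live on different workers or machines, but the split, process, and merge structure stays the same.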

When to Use Python for Data Science

Understanding when to use Python for different types of data is crucial for effective data analysis and decision-making.

Traditional Data:

Business Analytics: Use Python for traditional data analytics in business scenarios, such as sales forecasting, customer segmentation, and financial analysis. Python's libraries, like Pandas and Matplotlib, offer comprehensive tools for these tasks.

Data Cleaning and Transformation: Python is highly effective for data cleaning and transformation, ensuring that traditional data is accurate, consistent, and ready for analysis.
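A minimal pandas sketch of the cleaning and transformation steps described above follows. It trims and standardizes text, converts text amounts to numbers, removes duplicates, fills a missing value, and aggregates. The column names and records are hypothetical, and median imputation is just one reasonable choice among several.

```python
import pandas as pd

# Hypothetical raw export with typical problems: inconsistent casing and
# whitespace, duplicated rows, a missing amount, and amounts stored as text.
raw = pd.DataFrame(
    {
        "customer": ["  Alice", "bob", "Bob", "bob", "Carol "],
        "region": ["north", "South", "south", "south", None],
        "amount": ["120.5", "80", "80", "80", None],
    }
)

clean = (
    raw.assign(
        customer=raw["customer"].str.strip().str.title(),
        region=raw["region"].str.strip().str.title().fillna("Unknown"),
        amount=pd.to_numeric(raw["amount"], errors="coerce"),  # bad values -> NaN
    )
    .drop_duplicates()
    .reset_index(drop=True)
)
# Fill remaining missing amounts with the median instead of dropping the row.
clean["amount"] = clean["amount"].fillna(clean["amount"].median())

# Transformation: total amount per region, ready for reporting.
summary = clean.groupby("region", as_index=False)["amount"].sum()
print(summary)
```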

Big Data:

Real-Time Analytics: When dealing with real-time data streams from IoT devices or social media platforms, Python's integration with big data frameworks like Apache Spark enables efficient processing and analysis.

Large-Scale Machine Learning: For large-scale machine learning projects, Python's compatibility with libraries like TensorFlow and PyTorch, combined with big data processing tools, makes it an ideal choice.
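Large-scale training usually relies on mini-batch gradient descent: the model sees a small batch at a time, so the full dataset never has to fit in memory. The dependency-free sketch below shows that loop for a one-variable linear model. TensorFlow and PyTorch automate the same pattern with their own data-loading and optimizer APIs. The synthetic data source and the learning-rate settings are assumptions chosen so the result is easy to check.

```python
import random
from typing import Iterator, List, Tuple

Batch = List[Tuple[float, float]]


def synthetic_batches(n_rows: int, batch_size: int, seed: int = 0) -> Iterator[Batch]:
    """Hypothetical data source that yields (x, y) rows one batch at a time.

    Rows follow y = 2x + 1 so the learned parameters are easy to check; a real
    pipeline would stream batches from files, a database, or a Spark/Dask job.
    """
    rng = random.Random(seed)
    for start in range(0, n_rows, batch_size):
        size = min(batch_size, n_rows - start)
        xs = [rng.uniform(-1.0, 1.0) for _ in range(size)]
        yield [(x, 2.0 * x + 1.0) for x in xs]


def train_linear_sgd(n_rows: int = 20_000, batch_size: int = 64,
                     lr: float = 0.1, epochs: int = 3) -> Tuple[float, float]:
    """Fit y = w*x + b by mini-batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    for epoch in range(epochs):
        for batch in synthetic_batches(n_rows, batch_size, seed=epoch):
            n = len(batch)
            # Gradients of this batch's mean squared error with respect to w and b.
            grad_w = sum(2.0 * (w * x + b - y) * x for x, y in batch) / n
            grad_b = sum(2.0 * (w * x + b - y) for x, y in batch) / n
            w -= lr * grad_w
            b -= lr * grad_b
    return w, b


w, b = train_linear_sgd()
print(f"learned w={w:.3f}, b={b:.3f}")
```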

The Importance of Data Science Training Programs

To effectively navigate the complexities of both traditional data and big data, it is essential to acquire the right skills and knowledge. Data science training programs provide comprehensive education and hands-on experience in data science tools and techniques.

Comprehensive Curriculum: Data science training programs cover a wide range of topics, including data analysis, machine learning, big data processing, and data visualization, ensuring a well-rounded education.

Practical Experience: These programs emphasize practical learning through projects and case studies, allowing students to apply theoretical knowledge to real-world scenarios.

Expert Guidance: Experienced instructors and industry mentors offer valuable insights and support, helping students master the complexities of data science.

Career Opportunities: Graduates of data science training programs are in high demand across various industries, with opportunities to work on innovative projects and drive data-driven decision-making.

Conclusion

Understanding the differences between big data and traditional data is fundamental for any aspiring data scientist. While traditional data is structured, manageable, and used for transaction processing, big data is vast, varied, and requires advanced tools for real-time processing and analysis. Python, with its robust ecosystem of libraries and frameworks, is an indispensable tool for handling both types of data effectively.

Enrolling in a data science training program equips you with the skills and knowledge needed to navigate the complexities of data science. Whether you're working with traditional data or big data, mastering Python and other data science tools will enable you to extract valuable insights and drive innovation in your field. Start your journey today and unlock the potential of data science with a comprehensive training program.

#Big Data#Traditional Data#Data Science#Python Programming#Data Analysis#Machine Learning#Predictive Analytics#Data Science Training Program#SQL#Data Visualization#Business Analytics#Real-Time Analytics#IoT Data#Data Transformation

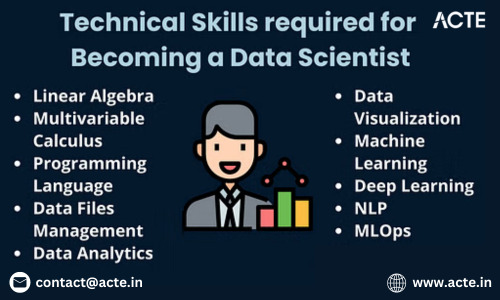

Unlocking the Power of Data: Essential Skills to Become a Data Scientist

In today's data-driven world, the demand for skilled data scientists is skyrocketing. These professionals are the key to transforming raw information into actionable insights, driving innovation and shaping business strategies. But what exactly does it take to become a data scientist? It's a multidisciplinary field, requiring a unique blend of technical prowess and analytical thinking. Let's break down the essential skills you'll need to embark on this exciting career path.

1. Strong Mathematical and Statistical Foundation:

At the heart of data science lies a deep understanding of mathematics and statistics. You'll need to grasp concepts like:

Linear Algebra and Calculus: Essential for understanding machine learning algorithms and optimizing models.

Probability and Statistics: Crucial for data analysis, hypothesis testing, and drawing meaningful conclusions from data.
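As a small example of statistics in practice, the sketch below computes Welch's t statistic and its approximate degrees of freedom for two samples. These are the core of a two-sample hypothesis test that does not assume equal variances. The sample values are hypothetical. Turning the statistic into a p-value requires the t distribution, which the standard library lacks. If SciPy is available, `scipy.stats.ttest_ind(a, b, equal_var=False)` runs the full test.

```python
import math
from statistics import mean, variance
from typing import Sequence, Tuple


def welch_t_stats(a: Sequence[float], b: Sequence[float]) -> Tuple[float, float]:
    """Return Welch's t statistic and Welch-Satterthwaite degrees of freedom.

    t  = (mean_a - mean_b) / sqrt(var_a/n_a + var_b/n_b)
    df = (var_a/n_a + var_b/n_b)**2
         / ((var_a/n_a)**2 / (n_a - 1) + (var_b/n_b)**2 / (n_b - 1))
    where var is the sample variance (n - 1 in the denominator).
    """
    if len(a) < 2 or len(b) < 2:
        raise ValueError("each sample needs at least two observations")
    var_over_n_a = variance(a) / len(a)
    var_over_n_b = variance(b) / len(b)
    t = (mean(a) - mean(b)) / math.sqrt(var_over_n_a + var_over_n_b)
    df = (var_over_n_a + var_over_n_b) ** 2 / (
        var_over_n_a ** 2 / (len(a) - 1) + var_over_n_b ** 2 / (len(b) - 1)
    )
    return t, df


# Hypothetical task-completion times (minutes) for two page designs.
design_a = [12.0, 14.0, 11.0, 13.0, 15.0]
design_b = [10.0, 9.0, 11.0, 10.0, 12.0]
t, df = welch_t_stats(design_a, design_b)
print(f"t = {t:.2f}, df = {df:.1f}")
```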

2. Programming Proficiency (Python and/or R):

Data scientists are fluent in at least one, if not both, of the dominant programming languages in the field:

Python: Known for its readability and extensive libraries like Pandas, NumPy, Scikit-learn, and TensorFlow, making it ideal for data manipulation, analysis, and machine learning.

R: Specifically designed for statistical computing and graphics, R offers a rich ecosystem of packages for statistical modeling and visualization.

3. Data Wrangling and Preprocessing Skills:

Raw data is rarely clean and ready for analysis. A significant portion of a data scientist's time is spent on:

Data Cleaning: Handling missing values, outliers, and inconsistencies.

Data Transformation: Reshaping, merging, and aggregating data.

Feature Engineering: Creating new features from existing data to improve model performance.
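Feature engineering often takes only a few lines of pandas. This sketch derives a ratio feature, calendar features, and one-hot encoded categories from a hypothetical orders table. Which features actually help depends on the model and the problem.

```python
import pandas as pd

# Hypothetical order data; the derived columns below are illustrative features.
orders = pd.DataFrame(
    {
        "order_date": pd.to_datetime(["2024-01-05", "2024-01-06", "2024-02-10"]),
        "quantity": [2, 5, 1],
        "revenue": [40.0, 75.0, 30.0],
        "channel": ["web", "store", "web"],
    }
)

features = orders.assign(
    unit_price=orders["revenue"] / orders["quantity"],   # ratio feature
    month=orders["order_date"].dt.month,                  # calendar feature
    is_weekend=orders["order_date"].dt.dayofweek >= 5,    # Saturday=5, Sunday=6
)
# One-hot encode the categorical column so models can consume it numerically.
features = pd.get_dummies(features, columns=["channel"], prefix="channel")
print(features.drop(columns="order_date"))
```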

4. Expertise in Databases and SQL:

Data often resides in databases. Proficiency in SQL (Structured Query Language) is essential for:

Extracting Data: Querying and retrieving data from various database systems.

Data Manipulation: Filtering, joining, and aggregating data within databases.
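The sketch below shows both SQL tasks with Python's built-in `sqlite3` module. It filters, joins, and aggregates inside the database, so only the summary comes back to Python. The `customers` and `orders` tables are hypothetical. The same SQL carries over to most relational databases with minor dialect differences, and the `LEFT JOIN` keeps customers who have no orders.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, country TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL);
    INSERT INTO customers VALUES (1, 'Alice', 'IN'), (2, 'Bob', 'US'), (3, 'Chen', 'IN');
    INSERT INTO orders VALUES (1, 1, 50.0), (2, 1, 25.0), (3, 2, 40.0);
    """
)

# Extract with a filter (WHERE), combine tables (JOIN), and aggregate (GROUP BY).
# The "?" placeholder passes the country value safely as a parameter.
query = """
    SELECT c.name, COUNT(o.id) AS n_orders, COALESCE(SUM(o.total), 0) AS spend
    FROM customers AS c
    LEFT JOIN orders AS o ON o.customer_id = c.id
    WHERE c.country = ?
    GROUP BY c.id, c.name
    ORDER BY spend DESC
"""
rows = conn.execute(query, ("IN",)).fetchall()
conn.close()
print(rows)
```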

5. Machine Learning Mastery:

Machine learning is a core component of data science, enabling you to build models that learn from data and make predictions or classifications. Key areas include:

Supervised Learning: Regression and classification algorithms.

Unsupervised Learning: Clustering, dimensionality reduction.

Model Selection and Evaluation: Choosing the right algorithms and assessing their performance.
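The compact scikit-learn sketch below covers model selection and evaluation. It compares candidate models with cross-validation on the training data and scores only the chosen one on a held-out test set. It uses the Iris dataset bundled with scikit-learn. The two candidates and their settings are illustrative choices, not recommendations.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import cross_val_score, train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)
# Hold out a test set that plays no part in choosing the model.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

candidates = {
    "logistic_regression": LogisticRegression(max_iter=1000),
    "decision_tree": DecisionTreeClassifier(max_depth=3, random_state=0),
}
# Model selection: mean 5-fold cross-validation accuracy on the training data only.
cv_scores = {
    name: cross_val_score(model, X_train, y_train, cv=5).mean()
    for name, model in candidates.items()
}
best_name = max(cv_scores, key=cv_scores.get)

# Evaluation: refit the chosen model and score it once on the untouched test set.
best_model = candidates[best_name].fit(X_train, y_train)
test_accuracy = accuracy_score(y_test, best_model.predict(X_test))
print(cv_scores, best_name, round(test_accuracy, 3))
```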

6. Data Visualization and Communication Skills:

Being able to effectively communicate your findings is just as important as the analysis itself. You'll need to:

Visualize Data: Create compelling charts and graphs to explore patterns and insights using libraries like Matplotlib, Seaborn (Python), or ggplot2 (R).

Tell Data Stories: Present your findings in a clear and concise manner that resonates with both technical and non-technical audiences.
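The Matplotlib sketch below combines both ideas. It plots a small hypothetical revenue series and uses the title to state the takeaway, with labeled axes and units. It renders with the non-interactive Agg backend into an in-memory PNG, so it also runs on a server without a display.

```python
import io

import matplotlib

matplotlib.use("Agg")  # non-interactive backend: no display needed
import matplotlib.pyplot as plt

months = ["Jan", "Feb", "Mar", "Apr"]
revenue = [120, 135, 128, 160]  # hypothetical, in thousands

fig, ax = plt.subplots(figsize=(6, 3.5))
ax.plot(months, revenue, marker="o")
# Let the title carry the takeaway, and label the axes with units.
ax.set_title("Revenue peaked in April")
ax.set_xlabel("Month")
ax.set_ylabel("Revenue (thousands)")
fig.tight_layout()

buffer = io.BytesIO()
fig.savefig(buffer, format="png")  # or fig.savefig("revenue.png") to write a file
plt.close(fig)
print(f"Rendered {len(buffer.getvalue())} bytes of PNG")
```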

7. Critical Thinking and Problem-Solving Abilities:

Data scientists are essentially problem solvers. You need to be able to:

Define Business Problems: Translate business challenges into data science questions.

Develop Analytical Frameworks: Structure your approach to solve complex problems.

Interpret Results: Draw meaningful conclusions and translate them into actionable recommendations.

8. Domain Knowledge (Optional but Highly Beneficial):

Having expertise in the specific industry or domain you're working in can give you a significant advantage. It helps you understand the context of the data and formulate more relevant questions.

9. Curiosity and a Growth Mindset:

The field of data science is constantly evolving. A genuine curiosity and a willingness to learn new technologies and techniques are crucial for long-term success.

10. Strong Communication and Collaboration Skills:

Data scientists often work in teams and need to collaborate effectively with engineers, business stakeholders, and other experts.

Kickstart Your Data Science Journey with Xaltius Academy's Data Science and AI Program:

Acquiring these skills can seem like a daunting task, but structured learning programs can provide a clear and effective path. Xaltius Academy's Data Science and AI Program is designed to equip you with the essential knowledge and practical experience to become a successful data scientist.

Key benefits of the program:

Comprehensive Curriculum: Covers all the core skills mentioned above, from foundational mathematics to advanced machine learning techniques.

Hands-on Projects: Provides practical experience working with real-world datasets and building a strong portfolio.

Expert Instructors: Learn from industry professionals with years of experience in data science and AI.

Career Support: Offers guidance and resources to help you launch your data science career.

Becoming a data scientist is a rewarding journey that blends technical expertise with analytical thinking. By focusing on developing these key skills and leveraging resources like Xaltius Academy's program, you can position yourself for a successful and impactful career in this in-demand field. The power of data is waiting to be unlocked – are you ready to take the challenge?

Why Python Will Thrive: Future Trends and Applications

Python has already made a significant impact in the tech world, and its future looks even more promising. From its simplicity and versatility to its widespread use in cutting-edge technologies, Python is expected to keep thriving in the coming years. With the support of a Python Course in Chennai, learning Python becomes much more enjoyable, whatever your level of experience or your reason for switching from another programming language.

Let's explore why Python will remain at the forefront of software development and what trends and applications will contribute to its ongoing dominance.

1. Artificial Intelligence and Machine Learning

Python is already the go-to language for AI and machine learning, and its role in these fields is set to expand further. With powerful libraries such as TensorFlow, PyTorch, and Scikit-learn, Python simplifies the development of machine learning models and artificial intelligence applications. As more industries integrate AI for automation, personalization, and predictive analytics, Python will remain a core language for developing intelligent systems.

2. Data Science and Big Data

Data science is one of the most significant areas where Python has excelled. Libraries like Pandas, NumPy, and Matplotlib make data manipulation and visualization simple and efficient. As companies and organizations continue to generate and analyze vast amounts of data, Python’s ability to process, clean, and visualize big data will only become more critical. Additionally, Python’s compatibility with big data platforms like Hadoop and Apache Spark ensures that it will remain a major player in data-driven decision-making.

3. Web Development

Python’s role in web development is growing thanks to frameworks like Django and Flask, which provide robust, scalable, and secure solutions for building web applications. With the increasing demand for interactive websites and APIs, Python is well-positioned to continue serving as a top language for backend development. Its integration with cloud computing platforms will also fuel its growth in building modern web applications that scale efficiently.

4. Automation and Scripting

Automation is another area where Python excels. Developers use Python to automate tasks ranging from system administration to testing and deployment. With the rise of DevOps practices and the growing demand for workflow automation, Python’s role in streamlining repetitive processes will continue to grow. Businesses across industries will rely on Python to boost productivity, reduce errors, and optimize performance. With the aid of Best Online Training & Placement Programs, which offer comprehensive training and job placement support to anyone looking to develop their talents, it’s easier to learn this tool and advance your career.
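Tidying a folder is a typical small automation job. The standard-library sketch below moves each file into a subfolder named after its extension. The function name is hypothetical, and the demo runs in a temporary directory so no real files are touched.

```python
import shutil
import tempfile
from pathlib import Path


def organize_by_extension(folder: Path) -> dict:
    """Move each file in `folder` into a subfolder named after its extension.

    Returns a mapping of subfolder name -> number of files moved.
    """
    moved: dict = {}
    for path in list(folder.iterdir()):  # snapshot, so new subfolders aren't rescanned
        if not path.is_file():
            continue
        bucket = path.suffix.lower().lstrip(".") or "no_extension"
        target_dir = folder / bucket
        target_dir.mkdir(exist_ok=True)
        shutil.move(str(path), str(target_dir / path.name))
        moved[bucket] = moved.get(bucket, 0) + 1
    return moved


# Demo in a throwaway directory so nothing real is touched.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    for name in ["report.PDF", "data.csv", "notes.txt", "summary.pdf", "README"]:
        (root / name).write_text("sample")
    print(organize_by_extension(root))
```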

5. Cybersecurity and Ethical Hacking

With cyber threats becoming increasingly sophisticated, cybersecurity is a critical concern for businesses worldwide. Python is widely used for penetration testing, vulnerability scanning, and threat detection because it is simple and effective. Libraries like Scapy, for building and inspecting network packets, and PyCrypto make Python an excellent choice for ethical hacking and security work; note that PyCrypto is no longer maintained, and PyCryptodome is its actively maintained successor. As the need for robust cybersecurity measures increases, Python’s role in safeguarding digital assets will continue to grow.
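Security scripting does not always need third-party libraries. The defensive example below is not Scapy or PyCrypto code. It uses the standard library's `hashlib` and `hmac` to detect whether a file has changed since a known-good SHA-256 hash was recorded, a basic form of file-integrity monitoring. The file name and contents are hypothetical.

```python
import hashlib
import hmac
import tempfile
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 65536) -> str:
    """Hash a file in chunks so large files never need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def is_untampered(path: Path, expected_hex: str) -> bool:
    """Compare against a known-good hash using a constant-time comparison."""
    return hmac.compare_digest(sha256_of_file(path), expected_hex.lower())


with tempfile.TemporaryDirectory() as tmp:
    binary = Path(tmp) / "tool.bin"
    binary.write_bytes(b"trusted build")
    baseline = sha256_of_file(binary)       # recorded while the file was known good
    print(is_untampered(binary, baseline))  # True
    binary.write_bytes(b"trusted build + backdoor")
    print(is_untampered(binary, baseline))  # False
```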

6. Internet of Things (IoT)

Python’s compatibility with microcontrollers and embedded systems makes it a strong contender in the growing field of IoT. Implementations like MicroPython and CircuitPython, which run a lean version of Python directly on microcontrollers, enable developers to build IoT applications efficiently, whether for home automation, smart cities, or industrial systems. As the number of connected devices continues to rise, Python is likely to remain a popular language for creating scalable and reliable IoT solutions.

7. Cloud Computing and Serverless Architectures

The rise of cloud computing and serverless architectures has created new opportunities for Python. Cloud platforms like AWS, Google Cloud, and Microsoft Azure all support Python, allowing developers to build scalable and cost-efficient applications. With its flexibility and integration capabilities, Python is perfectly suited for developing cloud-based applications, serverless functions, and microservices.
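Serverless Python functions are often just a handler. The sketch below follows the AWS Lambda Python convention of a handler that receives `event` and `context`. It assumes an API Gateway proxy-style event and response shape, and the greeting logic is purely illustrative. Because the handler is a plain function, it can be called locally with a fake event before deployment.

```python
import json


def lambda_handler(event, context):
    """Entry point invoked by AWS Lambda with the triggering event and a runtime context.

    Assumes an API Gateway proxy-style event, where query parameters arrive in
    event["queryStringParameters"] (which can be None) and the response must
    carry a statusCode and a string body.
    """
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


# Local check with a hand-made event; no AWS account needed.
print(lambda_handler({"queryStringParameters": {"name": "Ada"}}, context=None))
```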

8. Gaming and Virtual Reality

Python has long been used in game development, with libraries such as Pygame offering simple tools to create 2D games. However, as gaming and virtual reality (VR) technologies evolve, Python’s role in developing immersive experiences will grow. The language’s ease of use and integration with game engines will make it a popular choice for building gaming platforms, VR applications, and simulations.

9. Expanding Job Market

As Python’s applications continue to grow, so does the demand for Python developers. From startups to tech giants like Google, Facebook, and Amazon, companies across industries are seeking professionals who are proficient in Python. The increasing adoption of Python in various fields, including data science, AI, cybersecurity, and cloud computing, ensures a thriving job market for Python developers in the future.

10. Constant Evolution and Community Support

Python’s open-source nature means that it’s constantly evolving with new libraries, frameworks, and features. Its vibrant community of developers contributes to its growth and ensures that Python stays relevant to emerging trends and technologies. Whether it’s a new tool for AI or a breakthrough in web development, Python’s community is always working to improve the language and make it more efficient for developers.

Conclusion

Python’s future is bright, with its presence continuing to grow in AI, data science, automation, web development, and beyond. As industries become increasingly data-driven, automated, and connected, Python’s simplicity, versatility, and strong community support make it an ideal choice for developers. Whether you are a beginner looking to start your coding journey or a seasoned professional exploring new career opportunities, learning Python offers long-term benefits in a rapidly evolving tech landscape.

#python course#python training#python#technology#tech#python programming#python online training#python online course#python online classes#python certification

Short-Term vs. Long-Term Data Analytics Course in Delhi: Which One to Choose?

In today’s digital world, data is everywhere. From small businesses to large organizations, everyone uses data to make better decisions. Data analytics helps in understanding and using this data effectively. If you are interested in learning data analytics, you might wonder whether to choose a short-term or a long-term course. Both options have their benefits, and your choice depends on your goals, time, and career plans.

At Uncodemy, we offer both short-term and long-term data analytics courses in Delhi. This article will help you understand the key differences between these courses and guide you to make the right choice.

What is Data Analytics?

Data analytics is the process of examining large sets of data to find patterns, insights, and trends. It involves collecting, cleaning, analyzing, and interpreting data. Companies use data analytics to improve their services, understand customer behavior, and increase efficiency.

There are four main types of data analytics:

Descriptive Analytics: Understanding what has happened in the past.

Diagnostic Analytics: Identifying why something happened.

Predictive Analytics: Forecasting future outcomes.

Prescriptive Analytics: Suggesting actions to achieve desired outcomes.
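To make the four types concrete, the sketch below runs one hypothetical weekly sales series through each of them using only Python's standard library. The least-squares trend line and the reorder rule are deliberately simple assumptions. Real predictive and prescriptive work would use richer models and business constraints.

```python
from statistics import mean

# Hypothetical weekly unit sales for one product.
weeks = [1, 2, 3, 4, 5, 6]
units = [100, 104, 111, 113, 121, 125]

# Descriptive: what happened?
print("Average weekly units:", round(mean(units), 1))

# Diagnostic: why did it happen? Real diagnosis needs more data (prices,
# promotions); here we only locate the biggest jump worth investigating.
changes = [later - earlier for earlier, later in zip(units, units[1:])]
biggest = max(range(len(changes)), key=changes.__getitem__)
print(f"Largest jump: week {weeks[biggest]} -> {weeks[biggest + 1]} (+{changes[biggest]})")

# Predictive: what is likely next? Fit a least-squares line and extend it one week.
x_bar, y_bar = mean(weeks), mean(units)
slope = sum((x - x_bar) * (y - y_bar) for x, y in zip(weeks, units)) / sum(
    (x - x_bar) ** 2 for x in weeks
)
intercept = y_bar - slope * x_bar
forecast = intercept + slope * 7
print("Forecast for week 7:", round(forecast, 1))

# Prescriptive: what should we do? A toy rule turns the forecast into an action.
stock_on_hand = 110
safety_margin = 0.10  # hypothetical 10% buffer
order_qty = max(0, round(forecast * (1 + safety_margin)) - stock_on_hand)
print("Suggested reorder quantity:", order_qty)
```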

Short-Term Data Analytics Course

A short-term data analytics course is a fast-paced program designed to teach you essential skills quickly. These courses usually last from a few weeks to a few months.

Benefits of a Short-Term Data Analytics Course

Quick Learning: You can learn the basics of data analytics in a short time.

Cost-Effective: Short-term courses are usually more affordable.

Skill Upgrade: Ideal for professionals looking to add new skills without a long commitment.

Job-Ready: Get practical knowledge and start working in less time.

Who Should Choose a Short-Term Course?

Working Professionals: If you want to upskill without leaving your job.

Students: If you want to add data analytics to your resume quickly.

Career Switchers: If you want to explore data analytics before committing to a long-term course.

What You Will Learn in a Short-Term Course

Introduction to Data Analytics

Basic Tools (Excel, SQL, Python)

Data Visualization (Tableau, Power BI)

Basic Statistics and Data Interpretation

Hands-on Projects

Long-Term Data Analytics Course

A long-term data analytics course is a comprehensive program that provides in-depth knowledge. These courses usually last from six months to two years.

Benefits of a Long-Term Data Analytics Course

Deep Knowledge: Covers advanced topics and techniques in detail.

Better Job Opportunities: Preferred by employers for specialized roles.

Practical Experience: Includes internships and real-world projects.

Certifications: You may earn industry-recognized certifications.

Who Should Choose a Long-Term Course?

Beginners: If you want to start a career in data analytics from scratch.

Career Changers: If you want to switch to a data analytics career.

Serious Learners: If you want advanced knowledge and long-term career growth.

What You Will Learn in a Long-Term Course

Advanced Data Analytics Techniques

Machine Learning and AI

Big Data Tools (Hadoop, Spark)

Data Ethics and Governance

Capstone Projects and Internships

Key Differences Between Short-Term and Long-Term Courses

| Feature | Short-Term Course | Long-Term Course |
|---|---|---|
| Duration | Weeks to a few months | Six months to two years |
| Depth of Knowledge | Basic and Intermediate Concepts | Advanced and Specialized Concepts |
| Cost | More Affordable | Higher Investment |
| Learning Style | Fast-Paced | Detailed and Comprehensive |
| Career Impact | Quick Entry-Level Jobs | Better Career Growth and High-Level Jobs |
| Certification | Basic Certificate | Industry-Recognized Certifications |
| Practical Projects | Limited | Extensive and Real-World Projects |

How to Choose the Right Course for You

When deciding between a short-term and long-term data analytics course at Uncodemy, consider these factors:

Your Career Goals

If you want a quick job or basic knowledge, choose a short-term course.

If you want a long-term career in data analytics, choose a long-term course.

Time Commitment

Choose a short-term course if you have limited time.

Choose a long-term course if you can dedicate several months to learning.

Budget

Short-term courses are usually more affordable.

Long-term courses require a bigger investment but offer better returns.

Current Knowledge

If you already know some basics, a short-term course will enhance your skills.

If you are a beginner, a long-term course will provide a solid foundation.

Job Market

Short-term courses can help you get entry-level jobs quickly.

Long-term courses open doors to advanced and specialized roles.

Why Choose Uncodemy for Data Analytics Courses in Delhi?

At Uncodemy, we provide top-quality training in data analytics. Our courses are designed by industry experts to meet the latest market demands. Here’s why you should choose us:

Experienced Trainers: Learn from professionals with real-world experience.

Practical Learning: Hands-on projects and case studies.

Flexible Schedule: Choose classes that fit your timing.

Placement Assistance: We help you find the right job after course completion.

Certification: Receive a recognized certificate to boost your career.

Final Thoughts

Choosing between a short-term and long-term data analytics course depends on your goals, time, and budget. If you want quick skills and job readiness, a short-term course is ideal. If you seek in-depth knowledge and long-term career growth, a long-term course is the better choice.

At Uncodemy, we offer both options to meet your needs. Start your journey in data analytics today and open the door to exciting career opportunities. Visit our website or contact us to learn more about our Data Analytics course in Delhi.

Your future in data analytics starts here with Uncodemy!

Why Tableau is Essential in Data Science: Transforming Raw Data into Insights

Data science is all about turning raw data into valuable insights. But numbers and statistics alone don’t tell the full story—they need to be visualized to make sense. That’s where Tableau comes in.

Tableau is a powerful tool that helps data scientists, analysts, and businesses see and understand data better. It simplifies complex datasets, making them interactive and easy to interpret. But with so many tools available, why is Tableau a must-have for data science? Let’s explore.

1. The Importance of Data Visualization in Data Science

Imagine you’re working with millions of data points from customer purchases, social media interactions, or financial transactions. Analyzing raw numbers manually would be overwhelming.

That’s why visualization is crucial in data science:

Identifies trends and patterns – Instead of sifting through spreadsheets, you can quickly spot trends in a visual format.

Makes complex data understandable – Graphs, heatmaps, and dashboards simplify the interpretation of large datasets.

Enhances decision-making – Stakeholders can easily grasp insights and make data-driven decisions faster.

Saves time and effort – Instead of writing lengthy reports, an interactive dashboard tells the story in seconds.

Without tools like Tableau, data science would be limited to experts who can code and run statistical models. With Tableau, insights become accessible to everyone—from data scientists to business executives.

2. Why Tableau Stands Out in Data Science

A. User-Friendly and Requires No Coding

One of the biggest advantages of Tableau is its drag-and-drop interface. Unlike Python or R, which require programming skills, Tableau allows users to create visualizations without writing a single line of code.

Even if you’re a beginner, you can:

✅ Upload data from multiple sources

✅ Create interactive dashboards in minutes

✅ Share insights with teams easily

This no-code approach makes Tableau ideal for both technical and non-technical professionals in data science.

B. Handles Large Datasets Efficiently

Data scientists often work with massive datasets, whether financial transactions, customer behavior, or healthcare records. Traditional tools like Excel struggle with large volumes of data; a single Excel worksheet, for example, holds at most 1,048,576 rows.

Tableau, on the other hand:

Can process millions of rows while keeping dashboards responsive

Optimizes performance with Hyper, its in-memory data engine for extracts

Supports live connections to data sources for up-to-date analysis

This makes it a go-to tool for businesses that need fast, data-driven insights.

C. Connects with Multiple Data Sources

A major challenge in data science is bringing together data from different platforms. Tableau seamlessly integrates with a variety of sources, including:

Databases: MySQL, PostgreSQL, Microsoft SQL Server

Cloud platforms: AWS, Google BigQuery, Snowflake

Spreadsheets and APIs: Excel, Google Sheets, web-based data sources

This flexibility allows data scientists to combine datasets from multiple sources without needing complex SQL queries or scripts.

D. Real-Time Data Analysis

Industries like finance, healthcare, and e-commerce rely on real-time data to make quick decisions. Tableau’s live data connection allows users to:

Track stock market trends as they happen

Monitor website traffic and customer interactions in real time

Detect fraudulent transactions instantly

Instead of waiting for reports to be generated manually, Tableau delivers insights as events unfold.

E. Advanced Analytics Without Complexity

While Tableau is known for its visualizations, it also supports advanced analytics. You can:

Forecast trends based on historical data

Perform clustering and segmentation to identify patterns

Integrate with Python and R for machine learning and predictive modeling

This means data scientists can combine deep analytics with intuitive visualization, making Tableau a versatile tool.
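Tableau's documentation states that its built-in forecasting is based on exponential smoothing. The sketch below implements only the simplest form, simple exponential smoothing without trend or seasonality, to show the idea. Tableau's actual models are more elaborate, and the monthly figures are hypothetical.

```python
from typing import List, Sequence


def simple_exponential_smoothing(series: Sequence[float], alpha: float) -> List[float]:
    """Return smoothed levels; the last level is the one-step-ahead forecast.

    level_t = alpha * y_t + (1 - alpha) * level_{t-1}, starting from the first value.
    A larger alpha reacts faster to recent changes; a smaller alpha smooths more.
    """
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    if not series:
        raise ValueError("series must not be empty")
    levels = [float(series[0])]
    for value in series[1:]:
        levels.append(alpha * value + (1 - alpha) * levels[-1])
    return levels


monthly_visits = [200, 220, 210, 250, 240]  # hypothetical data
levels = simple_exponential_smoothing(monthly_visits, alpha=0.5)
print("Next-month forecast:", round(levels[-1], 2))
```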

3. How Tableau Helps Data Scientists in Real Life

Tableau is used across many industries to make data science more impactful and accessible. Here are some real-life scenarios:

A. Healthcare Analytics

Hospitals and research institutions use Tableau to:

✅ Monitor patient recovery rates and predict disease outbreaks

✅ Analyze hospital occupancy and resource allocation

✅ Identify trends in patient demographics and treatment outcomes

B. Finance and Banking

Banks and investment firms rely on Tableau to:

✅ Detect fraud by analyzing transaction patterns

✅ Track stock market fluctuations and make informed investment decisions

✅ Assess credit risk and loan performance

C. Marketing and Customer Insights

Companies use Tableau to:

✅ Track customer buying behavior and personalize recommendations

✅ Analyze social media engagement and campaign effectiveness

✅ Optimize ad spend by identifying high-performing channels

D. Retail and Supply Chain Management

Retailers leverage Tableau to:

✅ Forecast product demand and adjust inventory levels

✅ Identify regional sales trends and adjust marketing strategies

✅ Optimize supply chain logistics and reduce delivery delays

These applications show why Tableau is a must-have for data-driven decision-making.

4. Tableau vs. Other Data Visualization Tools

There are many visualization tools available, but Tableau consistently ranks as one of the best. Here’s why:

Tableau vs. Excel – Excel struggles with big data and lacks interactivity; Tableau handles large datasets effortlessly.

Tableau vs. Power BI – Power BI is great for Microsoft users, but Tableau offers more flexibility across different data sources.

Tableau vs. Python (Matplotlib, Seaborn) – Python libraries require coding skills, while Tableau simplifies visualization for all users.

This makes Tableau the go-to tool for both beginners and experienced professionals in data science.

5. Conclusion

Tableau has become an essential tool in data science because it simplifies data visualization, handles large datasets, and integrates seamlessly with various data sources. It enables professionals to analyze, interpret, and present data interactively, making insights accessible to everyone—from data scientists to business leaders.

If you’re looking to build a strong foundation in data science, learning Tableau is a smart career move. Many data science courses now include Tableau as a key skill, as companies increasingly demand professionals who can transform raw data into meaningful insights.

In a world where data is the driving force behind decision-making, Tableau ensures that the insights you uncover are not just accurate—but also clear, impactful, and easy to act upon.

#data science course#top data science course online#top data science institute online#artificial intelligence course#deepseek#tableau


India’s Tech Sector to Create 1.2 Lakh AI Job Vacancies in Two Years

India’s technology sector is set to experience a hiring boom, with vacancies for artificial intelligence (AI) roles projected to reach 1.2 lakh (120,000) over the next two years. As demand for AI technology grows across industries, companies are rapidly adopting advanced tools to stay competitive. The new roles will span tech services, Global Capability Centres (GCCs), pure-play AI and analytics firms, startups, and product companies.

Following a slowdown in tech hiring, the focus is shifting toward the development of AI. Market analysts estimate that Indian companies are moving beyond Proof of Concept (PoC) and deploying large-scale AI systems, generating high demand for roles such as AI researchers, product managers, and data application specialists. “We foresee about 120,000 to 150,000 AI-related job vacancies emerging as Indian IT services ramp up AI applications,” noted Gaurav Vasu, CEO of UnearthInsight.

India currently has 4 lakh AI professionals, but the gap between demand and supply is widening, with requirements expected to reach 6 lakh soon. By 2026, experts predict that the number of AI specialists required will hit 1 million, reflecting the deep integration of AI into industries like healthcare, e-commerce, and manufacturing.

The transition to AI-driven operations is also altering the nature of job vacancies. Unlike traditional software engineering roles, artificial intelligence positions focus on advanced algorithms, automation, and machine learning. Companies are recruiting experts in fields like deep learning, robotics, and natural language processing to meet the growing demand for innovative AI solutions. The development of AI has led to the rise of specialised roles such as Machine Learning Engineers, Data Scientists, and Prompt Engineers.

Krishna Vij, Vice President of TeamLease Digital, remarked that new AI roles are evolving across industries as AI becomes an essential tool for product development, operations, and consulting. “We expect close to 120,000 new job vacancies in AI across different sectors like finance, healthcare, and autonomous systems,” he said.

AI professionals also enjoy higher compensation compared to their traditional tech counterparts. Around 80% of AI-related job vacancies offer premium salaries, with packages 40%-80% higher due to the limited pool of trained talent. “The low availability of experienced AI professionals ensures that artificial intelligence roles will command attractive pay for the next 2-3 years,” noted Krishna Gautam, Business Head of Xpheno.

Candidates aiming for AI roles need to master key competencies. Proficiency in programming languages such as Python, R, Java, or C++ is essential, along with knowledge of current AI technology such as large language models (LLMs). Expertise in statistics, machine learning algorithms, and cloud computing platforms adds further value. As companies adopt AI across domains, candidates who combine critical thinking with adaptability will stay ahead, so it is important to keep learning and to follow informative AI blogs and news.

Although companies are prioritising experienced professionals for mid-to-senior roles, entry-level vacancies are also rising, driven by the increased use of AI in enterprises. Bootcamps, certifications, and academic programs are helping freshers gain the skills required for artificial intelligence roles. As AI development progresses, entry-level roles are expected to expand further. AI is reshaping industries by automating routine work, saving time, and increasing efficiency.

India’s tech sector is entering a transformative phase, with a surge in job vacancies linked to AI adoption. The next two years will witness fierce competition for AI talent, reshaping hiring trends across industries and unlocking new growth opportunities in artificial intelligence. Both startups and established companies are racing to secure talent, fostering a dynamic landscape where artificial intelligence expertise will drive innovation and growth. AI will also help organizations and businesses keep pace with new trends.

#aionlinemoney.com


Building Blocks of Data Science: What You Need to Succeed

Embarking on a journey in data science is a thrilling endeavor that requires a combination of education, skills, and insatiable curiosity. Choosing the best data science institute can further accelerate your journey into this thriving industry. Whether you're a seasoned professional or a newcomer to the field, here's a comprehensive guide to what is required to study data science.

1. Educational Background: Building a Solid Foundation

A strong educational foundation is the bedrock of a successful data science career. Mastery of mathematics and statistics, encompassing algebra, calculus, probability, and descriptive statistics, lays the groundwork for advanced data analysis. While a bachelor's degree in computer science, engineering, mathematics, or statistics is advantageous, data science is a field that welcomes individuals with diverse educational backgrounds.

2. Programming Skills: The Language of Data

Proficiency in programming languages is a non-negotiable skill for data scientists. Python and R stand out as the languages of choice in the data science community. Online platforms provide interactive courses, making the learning process engaging and effective.

3. Data Manipulation and Analysis: Unraveling Insights

The ability to manipulate and analyze data is at the core of data science. Familiarity with data manipulation libraries, such as Pandas in Python, is indispensable. Understanding how to clean, preprocess, and derive insights from data is a fundamental skill.
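As a minimal sketch of those cleaning and preprocessing steps in Pandas, the example below tidies a small, made-up sales table. The column names and values are hypothetical; the point is the sequence of common operations (normalizing text, converting numbers stored as strings, handling missing values, removing duplicates), not a fixed recipe.

```python
import pandas as pd

# Hypothetical raw export with typical problems: inconsistent casing,
# numbers stored as text, missing values, and duplicate rows.
raw = pd.DataFrame({
    "customer": ["Alice", "bob", "Bob", None, "Dana"],
    "region": ["North", "south", "South", "North", None],
    "amount": ["120.5", "80", "80", "n/a", "200"],
})


def clean_sales(df: pd.DataFrame) -> pd.DataFrame:
    """Return a cleaned copy of a raw sales table (hypothetical schema)."""
    out = df.copy()
    # Normalize text so "bob" and "Bob" are treated as the same customer.
    out["customer"] = out["customer"].str.strip().str.title()
    out["region"] = out["region"].str.strip().str.title()
    # Convert amounts to numbers; unparseable values such as "n/a" become NaN.
    out["amount"] = pd.to_numeric(out["amount"], errors="coerce")
    # Keep rows with an unknown region, but label them explicitly.
    out["region"] = out["region"].fillna("Unknown")
    # Drop rows missing fields that cannot be sensibly filled in.
    out = out.dropna(subset=["customer", "amount"])
    # Remove duplicates exposed by the normalization above.
    return out.drop_duplicates().reset_index(drop=True)


clean = clean_sales(raw)
print(clean)
print(clean.groupby("region")["amount"].sum())
```

Whether to drop, fill, or flag missing values depends on the dataset and the question being asked; the choices here are only illustrative.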

4. Database Knowledge: Harnessing Data Sources

Basic knowledge of databases and SQL is beneficial. Data scientists often need to extract and manipulate data from databases, making this skill essential for effective data handling.
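A small, self-contained illustration of extracting data with SQL, using Python's built-in sqlite3 module and an in-memory database in place of a real company database. The customers/orders schema and the rows are hypothetical.

```python
import sqlite3

# In-memory database standing in for a real company database (hypothetical schema).
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, city TEXT);
CREATE TABLE orders (
    id INTEGER PRIMARY KEY,
    customer_id INTEGER,
    total REAL,
    FOREIGN KEY (customer_id) REFERENCES customers(id)
);
INSERT INTO customers VALUES (1, 'Asha', 'Chennai'), (2, 'Ravi', 'Mumbai');
INSERT INTO orders VALUES (1, 1, 250.0), (2, 1, 100.0), (3, 2, 75.0);
""")

# Join, aggregate, and sort inside the database, then pull only the summary into Python.
query = """
SELECT c.city, COUNT(o.id) AS n_orders, SUM(o.total) AS revenue
FROM customers AS c
JOIN orders AS o ON o.customer_id = c.id
GROUP BY c.city
ORDER BY revenue DESC;
"""
rows = conn.execute(query).fetchall()
for city, n_orders, revenue in rows:
    print(f"{city}: {n_orders} orders, {revenue:.2f} revenue")
conn.close()
```

If you prefer to work in Pandas, `pandas.read_sql_query(query, conn)` loads the same result straight into a DataFrame.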

5. Machine Learning Fundamentals: Unlocking Predictive Power

A foundational understanding of machine learning concepts is key. Online courses and textbooks cover supervised and unsupervised learning, various algorithms, and methods for evaluating model performance.
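To make supervised learning, unsupervised learning, and model evaluation concrete, here is a minimal scikit-learn sketch on the Iris dataset bundled with the library. Logistic regression and k-means are just two convenient representatives of each family; the split size and random seeds are arbitrary choices.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

# Iris ships with scikit-learn, so no download is needed.
X, y = load_iris(return_X_y=True)

# Supervised learning: hold out 25% of rows to evaluate on data the model never saw.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=42, stratify=y
)
classifier = LogisticRegression(max_iter=1000)
classifier.fit(X_train, y_train)
accuracy = accuracy_score(y_test, classifier.predict(X_test))
print(f"Held-out accuracy: {accuracy:.2f}")

# Unsupervised learning: group the same flowers into 3 clusters without using labels.
kmeans = KMeans(n_clusters=3, n_init=10, random_state=0)
cluster_ids = kmeans.fit_predict(X)
print("Cluster sizes:", [int((cluster_ids == k).sum()) for k in range(3)])
```

Accuracy is measured only on the held-out test split; scoring on the training data would overstate how well the model generalizes.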

6. Data Visualization: Communicating Insights Effectively

Proficiency in data visualization tools like Matplotlib, Seaborn, or Tableau is valuable. The ability to convey complex findings through visuals is crucial for effective communication in data science.
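For a sense of what a basic chart looks like in code, this sketch draws a labeled bar chart with Matplotlib and saves it to a PNG file. The revenue figures and the file name are made up for illustration; Seaborn builds on Matplotlib and offers higher-level statistical plots with less code.

```python
import matplotlib

matplotlib.use("Agg")  # draw to files only; no display window needed
import matplotlib.pyplot as plt

months = ["Jan", "Feb", "Mar", "Apr"]
revenue = [120, 135, 128, 160]  # illustrative values, not real data

fig, ax = plt.subplots(figsize=(6, 3.5))
bars = ax.bar(months, revenue, color="steelblue")
ax.bar_label(bars)  # print each value on top of its bar (Matplotlib 3.4+)
ax.set_title("Monthly revenue (illustrative)")
ax.set_xlabel("Month")
ax.set_ylabel("Revenue (thousands)")
fig.tight_layout()
fig.savefig("monthly_revenue.png", dpi=150)  # hypothetical output file name
plt.close(fig)
```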

7. Domain Knowledge: Bridging Business and Data

Depending on the industry, having domain-specific knowledge is advantageous. This knowledge helps data scientists contextualize their findings and make informed decisions from a business perspective.

8. Critical Thinking and Problem-Solving: The Heart of Data Science

Data scientists are, at their core, problem solvers. Developing critical thinking skills is essential for approaching problems analytically and deriving meaningful insights from data.

9. Continuous Learning: Navigating the Dynamic Landscape

The field of data science is dynamic, with new tools and techniques emerging regularly. A commitment to continuous learning and staying updated on industry trends is vital for remaining relevant in this ever-evolving field.

10. Communication Skills: Bridging the Gap

Strong communication skills, both written and verbal, are imperative for data scientists. The ability to convey complex technical findings in a comprehensible manner is crucial, especially when presenting insights to non-technical stakeholders.

11. Networking and Community Engagement: A Supportive Ecosystem

Engaging with the data science community is a valuable aspect of the learning process. Attend meetups, participate in online forums, and connect with experienced practitioners to gain insights, support, and networking opportunities.

12. Hands-On Projects: Applying Theoretical Knowledge

Application of theoretical knowledge through hands-on projects is a cornerstone of mastering data science. Building a portfolio of projects showcases practical skills and provides tangible evidence of your capabilities to potential employers.

In conclusion, the journey in data science is unique for each individual. Tailor your learning path to your strengths, interests, and career goals. Continuous practice, real-world application, and a passion for solving complex problems will pave the way to success in this ever-evolving field. Choosing the best data science course in Chennai is a crucial step in acquiring the expertise needed for a successful career in data science.


Cracking the Code: Explore the World of Big Data Analytics

Welcome to the amazing world of Big Data Analytics! In this comprehensive course, we will delve into the key components and complexities of this rapidly growing field. So, strap in and get ready to embark on a journey that will equip you with the essential knowledge and skills to excel in the realm of Big Data Analytics.

Key Components

Understanding Big Data

What is big data and why is it so significant in today's digital landscape?

Exploring the three dimensions of big data: volume, velocity, and variety.

Overview of the challenges and opportunities associated with managing and analyzing massive datasets.

Data Analytics Techniques

Introduction to various data analytics techniques, such as descriptive, predictive, and prescriptive analytics.

Unraveling the mysteries behind statistical analysis, data visualization, and pattern recognition.

Hands-on experience with popular analytics tools like Python, R, and SQL.

Machine Learning and Artificial Intelligence

Unleashing the potential of machine learning algorithms in extracting insights and making predictions from data.

Understanding the fundamentals of artificial intelligence and its role in automating data analytics processes.

Applications of machine learning and AI in real-world scenarios across various industries.

Reasons to Choose the Course

Comprehensive Curriculum

An in-depth curriculum designed to cover all facets of Big Data Analytics.

From the basics to advanced topics, we leave no stone unturned in building your expertise.

Practical exercises and real-world case studies to reinforce your learning experience.

Expert Instructors

Learn from industry experts who possess a wealth of experience in big data analytics.

Gain insights from their practical knowledge and benefit from their guidance and mentorship.

Industry-relevant examples and scenarios shared by the instructors to enhance your understanding.

Hands-on Approach

Dive into the world of big data analytics through hands-on exercises and projects.

Apply the concepts you learn to solve real-world data problems and gain invaluable practical skills.

Work with real datasets to get a taste of what it's like to be a professional in the field.

Placement Opportunities

Industry Demands and Prospects

Discover the ever-increasing demand for skilled big data professionals across industries.

Explore the vast range of career opportunities in data analytics, including data scientist, data engineer, and business intelligence analyst.

Understand how our comprehensive course can enhance your prospects of securing a job in this booming field.

Internship and Job Placement Assistance

By enrolling in our course, you gain access to internship and job placement assistance.

Benefit from our extensive network of industry connections to get your foot in the door.

Leverage our guidance and support in crafting a compelling resume and preparing for interviews.

Education and Duration

Mode of Learning

Choose between online, offline, or blended learning options to cater to your preferences and schedule.

Seamlessly access learning materials, lectures, and assignments through our user-friendly online platform.

Engage in interactive discussions and collaborations with instructors and fellow students.

Duration and Flexibility

Our course is designed to be flexible, allowing you to learn at your own pace.

Depending on your dedication and time commitment, you can complete the course in as little as six months.

Benefit from lifetime access to course materials and updates, ensuring your skills stay up-to-date.

By embarking on this comprehensive course at ACTE institute, you will unlock the door to the captivating world of Big Data Analytics. With a solid foundation in the key components, hands-on experience, and placement opportunities, you will be equipped to seize the vast career prospects that await you. So, take the leap and join us on this exciting journey as we unravel the mysteries and complexities of Big Data Analytics.


Business Analytics vs. Data Science: Understanding the Key Differences

In today's data-driven world, terms like "business analytics" and "data science" are often used interchangeably. However, while they share a common goal of extracting insights from data, they are distinct fields with different focuses and methodologies. Let's break down the key differences to help you understand which path might be right for you.

Business Analytics: Focusing on the Present and Past

Business analytics primarily focuses on analyzing historical data to understand past performance and inform current business decisions. It aims to answer questions like:

What happened?

Why did it happen?

What is happening now?

Key characteristics of business analytics:

Descriptive and Diagnostic: It uses techniques like reporting, dashboards, and data visualization to summarize and explain past trends.

Structured Data: It often works with structured data from databases and spreadsheets.

Business Domain Expertise: A strong understanding of the specific business domain is crucial.

Tools: Business analysts typically use tools like Excel, SQL, Tableau, and Power BI.

Focus: Optimizing current business operations and improving efficiency.
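A rough illustration of the descriptive side ("What happened?") in Python with Pandas: summarizing hypothetical monthly sales by region and computing month-over-month growth, the kind of table that would typically feed a dashboard in Tableau or Power BI. The data and column names are invented for the example.

```python
import pandas as pd

# Hypothetical sales records: one row per month and region.
sales = pd.DataFrame({
    "month": ["2024-01", "2024-01", "2024-02", "2024-02", "2024-03", "2024-03"],
    "region": ["North", "South", "North", "South", "North", "South"],
    "revenue": [100, 80, 110, 70, 120, 90],
})

# "What happened?": revenue per month, split by region, plus a monthly total.
report = sales.pivot_table(index="month", columns="region", values="revenue", aggfunc="sum")
report["Total"] = report.sum(axis=1)

# A first step toward "Why did it happen?": spot changes with month-over-month growth.
report["MoM growth %"] = report["Total"].pct_change().mul(100).round(1)
print(report)
```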

Data Science: Predicting the Future and Building Models

Data science, on the other hand, focuses on building predictive models and developing algorithms to forecast future outcomes. It aims to answer questions like:

What will happen?

How can we make it happen?

Key characteristics of data science:

Predictive and Prescriptive: It uses machine learning, statistical modeling, and AI to predict future trends and prescribe optimal actions.

Unstructured and Structured Data: It can handle both structured and unstructured data from various sources.

Technical Proficiency: Strong programming skills (Python, R) and a deep understanding of machine learning algorithms are essential.

Tools: Data scientists use programming languages, machine learning libraries, and big data technologies.

Focus: Developing innovative solutions, building AI-powered products, and driving long-term strategic initiatives.
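By contrast, the simplest possible predictive model fits a trend to past values and extrapolates it. The sketch below fits a straight line with NumPy's least-squares `polyfit` to six made-up monthly revenue figures and forecasts three months ahead. Real forecasting work would account for seasonality, uncertainty, and more robust models; this only illustrates the "What will happen?" idea.

```python
import numpy as np

# Illustrative monthly revenue for the last six months (made-up figures).
revenue = np.array([100.0, 104.0, 109.0, 113.0, 118.0, 122.0])
months = np.arange(len(revenue))  # 0, 1, ..., 5

# Fit revenue ~ slope * month + intercept by ordinary least squares.
slope, intercept = np.polyfit(months, revenue, deg=1)

# "What will happen?": extrapolate the fitted trend three months ahead.
future_months = np.arange(len(revenue), len(revenue) + 3)
forecast = slope * future_months + intercept
print(f"trend: {slope:.2f} per month")
print("forecast:", np.round(forecast, 1))
```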

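Key Differences Summarized:

Focus: business analytics explains past and present performance; data science predicts future outcomes and prescribes actions.

Data: business analytics mostly works with structured data; data science handles both structured and unstructured data.

Tools: Excel, SQL, Tableau, and Power BI for business analytics; programming languages (Python, R), machine learning libraries, and big data technologies for data science.

Skills: strong business domain expertise for business analytics; programming and machine learning expertise for data science.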

Which Path is Right for You?

Choose Business Analytics if:

You are interested in analyzing past data to improve current business operations.

You have a strong understanding of a specific business domain.

You prefer working with structured data and using visualization tools.

Choose Data Science if:

You are passionate about building predictive models and developing AI-powered solutions.

You have a strong interest in programming and machine learning.

You enjoy working with both structured and unstructured data.

Xaltius Academy's Data Science & AI Course:

If you're leaning towards data science and want to delve into machine learning and AI, Xaltius Academy's Data Science & AI course is an excellent choice. This program equips you with the necessary skills and knowledge to become a proficient data scientist, covering essential topics like:

Python programming

Machine learning algorithms

Data visualization

And much more!

By understanding the distinct roles of business analytics and data science, you can make an informed decision about your career path and leverage the power of data to drive success.


Data Engineering Concepts, Tools, and Projects

Every organization in the world has large amounts of data. If it is not processed and analyzed, this data does not amount to anything. Data engineers are the ones who make this data ready for analysis. Data engineering refers to the process of developing, operating, and maintaining software systems that collect, analyze, and store an organization's data. In modern data analytics, data engineers build data pipelines, which form the backbone of the data infrastructure.

How to become a data engineer:

While there is no specific degree requirement for data engineering, a bachelor's or master's degree in computer science, software engineering, information systems, or a related field can provide a solid foundation. Courses in databases, programming, data structures, algorithms, and statistics are particularly beneficial. Data engineers should have strong programming skills. Focus on languages commonly used in data engineering, such as Python, SQL, and Scala. Learn the basics of data manipulation, scripting, and querying databases.

Familiarize yourself with various database systems like MySQL, PostgreSQL, and NoSQL databases such as MongoDB or Apache Cassandra. Build knowledge of data warehousing concepts, including schema design, indexing, and optimization techniques.
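To illustrate schema design and indexing, this sketch uses Python's built-in sqlite3 module to create a table with types and constraints, add an index on a frequently filtered column, and ask SQLite's `EXPLAIN QUERY PLAN` whether a query would use it. The table, column, and index names are hypothetical, and other database systems expose query plans through their own `EXPLAIN` variants.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE orders (
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL,
    order_date  TEXT    NOT NULL,            -- ISO-8601 date string
    total       REAL    NOT NULL CHECK (total >= 0)
);
-- Index the column used in frequent lookups so queries can avoid a full table scan.
CREATE INDEX idx_orders_customer ON orders (customer_id);
""")

# Load 1,000 synthetic rows spread across 100 customers.
conn.executemany(
    "INSERT INTO orders (customer_id, order_date, total) VALUES (?, ?, ?)",
    [(c % 100, "2024-01-01", 10.0 * c) for c in range(1000)],
)

# EXPLAIN QUERY PLAN reports how SQLite intends to run the query.
plan = conn.execute(
    "EXPLAIN QUERY PLAN SELECT total FROM orders WHERE customer_id = ?", (42,)
).fetchall()
for row in plan:
    print(row[-1])  # the last column holds the human-readable plan detail
```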

Data engineering tools recommendations:

Data engineering uses a variety of languages and tools to accomplish its objectives. These tools allow data engineers to carry out tasks such as building pipelines and algorithms more easily and effectively.

1. Amazon Redshift: A widely used cloud data warehouse built by Amazon, Redshift is the go-to choice for many teams and businesses. It is a comprehensive tool that enables the setup and scaling of data warehouses, making it incredibly easy to use.

Amazon Redshift is one of the most popular tools for business purposes, providing a powerful platform for managing large amounts of data. It allows users to quickly analyze complex datasets, build models that can be used for predictive analytics, and create visualizations that make results easier to interpret. With its scalability and flexibility, Amazon Redshift has become one of the go-to solutions for data engineering tasks.

2. BigQuery: Like Redshift, BigQuery is a fully managed cloud data warehouse, in this case from Google. It is especially favored by companies that already use the Google Cloud Platform. BigQuery not only scales well but also has robust machine learning features that make data analysis much easier.

3. Tableau: A powerful BI tool, Tableau is the second most popular one from our survey. It helps extract and gather data stored in multiple locations and comes with an intuitive drag-and-drop interface. Tableau makes data across departments readily available for data engineers and managers to create useful dashboards.

4. Looker: An essential BI tool, Looker helps visualize data more effectively. Unlike traditional BI tools, Looker uses LookML, a language for describing data, aggregates, calculations, and relationships in a SQL database. Spectacles, a newly released tool, assists in deploying the LookML layer, so non-technical staff have a much simpler time working with company data.

5. Apache Spark: An open-source unified analytics engine, Apache Spark is excellent for processing large datasets. It distributes work across a cluster and runs well alongside other distributed computing tools, making it essential for data mining and machine learning.

6. Airflow: With Airflow, workflows can be programmed and scheduled quickly and accurately, and users can monitor them through the built-in UI. It is the most used workflow solution, with 25% of data teams reporting that they use it.

7. Apache Hive: A data warehouse project built on Apache Hadoop, Hive simplifies data queries and analysis with its SQL-like interface. Its query language lets MapReduce jobs be executed on Hadoop, and Hive is mainly used for data summarization, analysis, and querying.

8. Segment: An efficient and comprehensive tool, Segment assists in collecting and using data from digital properties. It transforms, sends, and archives customer data, making the entire process much more manageable.

9. Snowflake: This cloud data warehouse has become very popular lately due to its capabilities in storing and computing data. Snowflake’s unique shared data architecture allows for a wide range of applications, making it an ideal choice for large-scale data storage, data engineering, and data science.

10. DBT: A command-line tool that uses SQL to transform data, DBT is a popular choice for data engineers and analysts. DBT streamlines the entire transformation process and is highly praised by many data engineers.

Data Engineering Projects:

Data engineering is an important process for businesses to understand and use in order to gain insights from their data. It involves designing, constructing, maintaining, and troubleshooting databases to ensure they run optimally. There are many tools available for data engineers, such as MySQL, SQL Server, Oracle RDBMS, OpenRefine, Trifacta, Data Ladder, Keras, Watson, and TensorFlow. Each tool has its strengths and weaknesses, so it’s important to research each one thoroughly before recommending it for specific tasks or projects.

Smart IoT Infrastructure:

As the IoT continues to develop, the volume of data generated at high speed is growing at a staggering rate. This creates challenges for companies in storage, analysis, and visualization.

Data Ingestion:

Data ingestion is the process of moving data from one or more sources to a target location for further preparation and analysis. This target is generally a data warehouse, a specialized database designed for efficient reporting.
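A minimal batch-ingestion sketch, assuming two hypothetical CSV exports with different column names that need to land in one warehouse table. It follows an extract, transform, load pattern using only the Python standard library, with an in-memory SQLite database standing in for the data warehouse.

```python
import csv
import io
import sqlite3

# Two hypothetical sources exporting the same entity with different column names.
crm_export = io.StringIO("id,full_name,signup\n1,Asha Rao,2024-01-05\n2,Ravi K,2024-02-11\n")
web_export = io.StringIO("user_id,name,created\n3,Meera S,2024-03-02\n")


def extract(source, mapping):
    """Extract rows from a CSV source and map them onto the target schema."""
    for row in csv.DictReader(source):
        record = {target: row[src].strip() for target, src in mapping.items()}
        record["customer_id"] = int(record["customer_id"])  # transform: enforce type
        yield record


# An in-memory SQLite database stands in for the real warehouse (an assumption).
warehouse = sqlite3.connect(":memory:")
warehouse.execute(
    "CREATE TABLE dim_customer (customer_id INTEGER PRIMARY KEY, name TEXT, signup_date TEXT)"
)

sources = [
    (crm_export, {"customer_id": "id", "name": "full_name", "signup_date": "signup"}),
    (web_export, {"customer_id": "user_id", "name": "name", "signup_date": "created"}),
]
with warehouse:  # load everything in one transaction; roll back if any row fails
    for source, mapping in sources:
        warehouse.executemany(
            "INSERT INTO dim_customer VALUES (:customer_id, :name, :signup_date)",
            extract(source, mapping),
        )

loaded = warehouse.execute("SELECT COUNT(*) FROM dim_customer").fetchone()[0]
print(loaded, "rows loaded")
```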

Data Quality and Testing:

Understand the importance of data quality and testing in data engineering projects. Learn about techniques and tools to ensure data accuracy and consistency.
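As a hedged example of automated data quality checks, the function below runs a few common validations on a hypothetical orders batch with Pandas: uniqueness, missing values, valid ranges, and allowed categories. The rules and the accepted currency codes are assumptions for illustration; in practice such checks often live in the pipeline itself, for instance as dbt tests such as `unique` and `not_null`.

```python
from typing import List

import pandas as pd


def check_quality(df: pd.DataFrame) -> List[str]:
    """Return human-readable data quality failures (an empty list means pass)."""
    problems = []
    # Uniqueness: each order should appear exactly once.
    if df["order_id"].duplicated().any():
        problems.append("duplicate order_id values")
    # Completeness: required columns must not contain missing values.
    missing = df[["order_id", "amount"]].isna().sum()
    for column, n in missing.items():
        if n:
            problems.append(f"{n} missing value(s) in {column}")
    # Validity: amounts cannot be negative (NaN compares as False here).
    if (df["amount"] < 0).any():
        problems.append("negative amounts")
    # Consistency: only the currencies we expect (a hypothetical allow-list).
    if not df["currency"].isin(["INR", "USD"]).all():
        problems.append("unexpected currency codes")
    return problems


batch = pd.DataFrame({
    "order_id": [1, 2, 2, 4],
    "amount": [100.0, -5.0, 30.0, None],
    "currency": ["INR", "USD", "EUR", "INR"],
})
issues = check_quality(batch)
for issue in issues:
    print("FAIL:", issue)
```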

Streaming Data:

Familiarize yourself with real-time data processing and streaming frameworks like Apache Kafka and Apache Flink. Develop your problem-solving skills through practical exercises and challenges.
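Frameworks like Kafka and Flink handle delivery, state, and late or out-of-order events, but the core idea of a windowed aggregation can be shown in plain Python. This sketch is not the Kafka or Flink API: it counts events per key in fixed (tumbling) time windows, assuming events arrive in timestamp order. The sensor events are made up.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, Tuple

Event = Tuple[float, str]  # (timestamp in seconds, sensor id)


def tumbling_counts(
    events: Iterable[Event], window: float
) -> Iterator[Tuple[float, Dict[str, int]]]:
    """Count events per key in fixed, non-overlapping time windows.

    Assumes events arrive in timestamp order. Each window's counts are emitted
    as soon as an event from a later window arrives, as a streaming job would.
    """
    current_start = None
    counts: Dict[str, int] = defaultdict(int)
    for timestamp, key in events:
        window_start = (timestamp // window) * window
        if current_start is None:
            current_start = window_start
        elif window_start != current_start:
            yield current_start, dict(counts)  # close the finished window
            counts.clear()
            current_start = window_start
        counts[key] += 1
    if current_start is not None:
        yield current_start, dict(counts)  # flush the last, possibly partial, window


# Hypothetical sensor events: (seconds since start, sensor id).
stream = [(0.5, "a"), (1.2, "b"), (3.9, "a"), (5.0, "a"), (7.1, "b"), (11.0, "a")]
for start, per_key in tumbling_counts(stream, window=5.0):
    print(f"window starting at {start:.0f}s: {per_key}")
```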

Conclusion:

Data engineers use these tools to build data systems. Database systems such as MySQL, SQL Server, and Oracle RDBMS support collecting, storing, managing, transforming, and analyzing large amounts of data to gain insights. Data engineers are responsible for designing efficient solutions that can handle high volumes of data while ensuring accuracy and reliability. They use a variety of technologies, including databases, programming languages, and machine learning algorithms, to create powerful applications that help businesses make better decisions based on the data they collect.


Business Analytics Training in Mumbai: A Gateway to Lucrative Career Opportunities

In today’s competitive and data-driven world, the ability to make informed business decisions using analytical tools is a highly sought-after skill. One of the most dynamic and rapidly growing fields that harness the power of data is business analytics. As industries increasingly rely on data to optimize performance, reduce costs, and improve customer satisfaction, professionals with business analytics expertise are in high demand. Among the cities leading this educational transformation in India is Mumbai, the financial capital of the country. Business analytics training in Mumbai offers an excellent opportunity for students, working professionals, and career changers to gain the skills required to thrive in a data-centric environment.

Mumbai is home to a vast number of multinational corporations, financial institutions, IT companies, and startups, all of which generate massive amounts of data on a daily basis. This makes the city an ideal location for pursuing business analytics training. Training institutes in Mumbai provide comprehensive programs that are designed to meet the evolving needs of the industry. These programs usually cover essential topics such as data mining, statistical analysis, predictive modeling, data visualization, and the use of tools like R, Python, SQL, Excel, Power BI, and Tableau. Many of these courses also include practical projects, case studies, and real-world scenarios to give learners hands-on experience and confidence in applying analytical methods to solve business problems.

One of the key advantages of business analytics training in Mumbai is access to experienced faculty members and industry professionals. These experts bring a wealth of knowledge and real-time industry insights to the classroom, enabling students to bridge the gap between theoretical learning and practical application. Furthermore, many training centers in Mumbai collaborate with top companies to provide internships, live projects, and placement assistance, giving learners a competitive edge in the job market. Whether it’s full-time training, part-time evening classes, or online learning options, Mumbai offers flexible learning modes to cater to different needs and schedules.

The demand for skilled analytics professionals continues to grow across various sectors such as banking, finance, healthcare, e-commerce, retail, and logistics. Roles like Business Analyst, Data Analyst, Data Scientist, and Analytics Consultant are not only high-paying but also come with strong career progression opportunities. By enrolling in business analytics training in Mumbai, individuals can significantly enhance their employability and open the door to global career paths.

In conclusion, business analytics is not just a trend—it is a fundamental shift in how businesses operate and make decisions. As the reliance on data continues to increase, acquiring the right analytical skills has become essential for anyone looking to stay relevant in today’s job market. With its robust infrastructure, industry connections, and quality educational offerings, business analytics training in Mumbai serves as a gateway to a promising and future-proof career.


The Role of AI in Due Diligence: Transforming M&A and Private Equity in 2025

Due diligence has always been a cornerstone of mergers, acquisitions (M&A), and private equity deals. But in 2025, it's no longer just a manual deep dive into spreadsheets and legal documents. Artificial Intelligence (AI) is revolutionizing how investment bankers and dealmakers assess companies—making the process faster, smarter, and far more efficient.

If you're looking to enter this fast-evolving domain, now is the time to upskill with a hands-on, industry-relevant investment banking course in Hyderabad that integrates AI-driven financial analysis and modern dealmaking tools.

🔍 What is Due Diligence—and Why AI Matters

Due diligence is the process of investigating a business before finalizing a deal. It includes reviewing:

Financial records

Legal contracts

Operational data

Regulatory compliance

Intellectual property and more

Traditionally, this process is tedious, time-consuming, and prone to human oversight. That’s where AI comes in—augmenting human decision-making with speed, scale, and precision.

🚀 How AI is Transforming Due Diligence in 2025

1. Automated Document Review

AI tools now scan thousands of contracts, invoices, and legal files in seconds—flagging inconsistencies, missing clauses, and risky terms. Natural Language Processing (NLP) is used to read and interpret complex documents.

🛠️ Example: JPMorgan's COiN platform reportedly reviews commercial loan agreements in seconds, work that previously took an estimated 360,000 hours of lawyers' and loan officers' time each year.
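Production document-review systems rely on trained NLP models, but the basic idea of flagging missing clauses and risky terms can be sketched with simple rules. The example below uses regular expressions only; the clause checklist, risky phrases, and contract text are hypothetical, and keyword matching like this misses paraphrases that an NLP model could catch.

```python
import re

# Hypothetical checklist: clause name -> pattern suggesting the clause is present.
REQUIRED_CLAUSES = {
    "governing law": re.compile(r"\bgoverning\s+law\b", re.IGNORECASE),
    "termination": re.compile(r"\bterminat(e|ion)\b", re.IGNORECASE),
    "confidentiality": re.compile(r"\bconfidential(ity)?\b", re.IGNORECASE),
}
# Hypothetical phrases a reviewer might want surfaced for a closer human look.
RISKY_TERMS = re.compile(r"\b(unlimited liability|auto-?renew\w*|exclusive)\b", re.IGNORECASE)


def review(text: str) -> dict:
    """Report checklist clauses that were not found and risky phrases that were."""
    missing = [name for name, pattern in REQUIRED_CLAUSES.items() if not pattern.search(text)]
    flagged = sorted({m.group(0).lower() for m in RISKY_TERMS.finditer(text)})
    return {"missing_clauses": missing, "flagged_terms": flagged}


contract = """This Agreement shall auto-renew annually. The Supplier accepts unlimited liability.
Either party may terminate with 30 days' notice."""
print(review(contract))
```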

2. Real-Time Financial Analysis

AI-powered platforms analyze balance sheets, P&L statements, cash flow trends, and debt positions automatically. They generate risk scores and even predict future performance.

These tools can:

Identify anomalies in financials

Benchmark performance against peers

Highlight hidden liabilities or weak revenue streams
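One simple way to identify anomalies in a financial series is a robust outlier score. This sketch uses the modified z-score (based on the median and the median absolute deviation, with the commonly used 3.5 cutoff) on illustrative monthly expense figures; it is a basic statistical check, not the method used by any particular due diligence platform.

```python
from statistics import median


def flag_anomalies(values, threshold=3.5):
    """Return the positions whose modified z-score exceeds `threshold`.

    Modified z-score = 0.6745 * (x - median) / MAD, where MAD is the median
    absolute deviation. Using medians keeps one extreme value from masking itself.
    """
    center = median(values)
    mad = median(abs(v - center) for v in values)
    if mad == 0:
        return []  # no spread in the data, so the score is undefined
    return [
        i for i, v in enumerate(values)
        if abs(0.6745 * (v - center) / mad) > threshold
    ]


# Illustrative monthly operating expenses for a target company (made-up figures).
monthly_expenses = [410, 395, 420, 405, 415, 980, 400, 412]
print(flag_anomalies(monthly_expenses))  # prints [5]: the 980 month stands out
```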

3. Compliance and Regulatory Red Flag Detection

AI can monitor local and global regulatory frameworks in real-time, ensuring the target company is compliant. This is especially useful in cross-border M&A where laws differ by region.

4. Cybersecurity & ESG Due Diligence

AI is now used to assess a target firm’s cybersecurity resilience and Environmental, Social, and Governance (ESG) compliance—two non-financial risks that have become deal-breakers in 2025.

5. Predictive Analytics for Deal Success

AI systems can analyze historical deal outcomes, market trends, and company performance to predict the likelihood of a successful acquisition—helping investors and banks make more informed decisions.

💼 AI in Indian Investment Banking and PE

India's deal ecosystem is evolving fast, and leading players are already adopting AI:

ICICI Securities and Kotak Investment Banking use analytics platforms to speed up deal evaluations.

Indian private equity firms are integrating AI-based scoring systems to assess startup scalability and founder credibility.

SEBI is encouraging fintech adoption in compliance and financial analysis.

With Hyderabad emerging as a fintech and analytics hub, professionals with hybrid skills in finance and AI are in high demand.

🎓 Why You Should Consider an Investment Banking Course in Hyderabad

Hyderabad is not just a tech city—it’s fast becoming a financial intelligence center, thanks to its booming IT sector, presence of global banks, and access to talent.

A modern investment banking course in Hyderabad will help you:

Learn how AI is applied in financial modeling and risk assessment

Use tools like Python, Power BI, and Excel with automation

Understand the role of AI in M&A, IPOs, private equity, and venture capital

Analyze real-world case studies of AI-led transactions

Stay ahead of compliance and regulatory trends powered by AI

By combining investment banking fundamentals with hands-on AI exposure, such a course prepares you for the next generation of roles in global finance.

🧠 Career Roles Emerging from AI-Driven Due Diligence

As AI continues to dominate due diligence processes, the following roles are gaining traction:

AI-Enabled M&A Analyst

Digital Due Diligence Associate

Transaction Risk Specialist

Compliance Automation Executive

Financial Data Scientist

ESG Analyst with AI Expertise

Firms are now hiring professionals who can blend finance, analytics, and technology—and the right training is your gateway in.

✅ Final Thoughts

AI is not replacing investment bankers—but it is amplifying their impact. In due diligence, it transforms long hours into instant insights, empowers smarter decisions, and minimizes risks that could derail multimillion-dollar deals.

To succeed in this AI-powered future, you need more than just Excel skills. You need a deep understanding of how AI integrates with finance—and the practical experience to apply it.

Enrolling in a cutting-edge investment banking course in Hyderabad is the first step to becoming a next-gen dealmaker equipped for the age of intelligent finance.

Python for Data Science: What You Need to Know

Data is at the heart of every modern business decision, and Python is the tool that helps professionals make sense of it. Whether you're analyzing trends, building predictive models, or cleaning datasets, Python offers the simplicity and power needed to get the job done. If you're aiming for a career in this high-demand field, enrolling in the best Python training in Hyderabad can help you master the language and its data science applications effectively.

Why Python is Perfect for Data Science

Python has become the language of choice for data science, and for good reason. It's easy to learn, highly readable, and backed by a massive community. Whether you're a beginner or come from a non-technical background, Python's clean syntax lets you focus on solving problems rather than wrestling with complex code structures.

Must-Know Python Libraries for Data Science

To work efficiently in data science, you’ll need to get comfortable with several powerful Python libraries:

NumPy – for numerical calculations and array operations.

Pandas – for working with structured data like tables and CSV files.

Matplotlib and Seaborn – for creating charts and visualizing data patterns.

Scikit-learn – for implementing machine learning algorithms.

TensorFlow or PyTorch – for deep learning projects.

These libraries form the backbone of most data science workflows.
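The following is a minimal sketch of NumPy and pandas working together on a small sales table. The column names and figures are invented for illustration. The sketch assumes both libraries are installed. Plotting with Matplotlib/Seaborn and model training are left out to keep it short.

```python
import numpy as np
import pandas as pd

# Hypothetical monthly revenue figures; one value is missing (NaN)
sales = pd.DataFrame({
    "region": ["North", "South", "North", "South", "North", "South"],
    "month": ["Jan", "Jan", "Feb", "Feb", "Mar", "Mar"],
    "revenue": [120.0, 95.0, 130.0, np.nan, 150.0, 110.0],
})

# NumPy: NaN-aware mean computed over the raw array of values
mean_revenue = np.nanmean(sales["revenue"].to_numpy())

# pandas: fill the missing value with that mean, then total revenue per region
sales["revenue"] = sales["revenue"].fillna(mean_revenue)
totals = sales.groupby("region")["revenue"].sum()
print(totals)
```

Running it prints revenue totals per region (North 400.0, South 326.0). The missing February value for South is replaced by the mean of the other five readings (121.0).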

Core Skills Every Data Scientist Needs

Learning Python is just the beginning. A successful data scientist also needs to:

Clean and prepare raw data (data wrangling).

Analyze data using statistics and visualizations.

Build, train, and test machine learning models.

Communicate findings through clear reports and dashboards.

Practicing these skills on real-world datasets will help you gain practical experience that employers value.

How to Get Started the Right Way

There are countless tutorials online, but a structured training program gives you a clearer path to success. The right course will cover everything from Python basics to advanced machine learning, including projects, assignments, and mentor support. This kind of guided learning builds both your confidence and your portfolio.

Conclusion: Learn Python for Data Science at SSSIT

Python is the backbone of data science, and knowing how to use it can unlock exciting career opportunities in AI, analytics, and more. You don't have to figure everything out on your own. Join a professional course that offers step-by-step learning, real-time projects, and expert mentoring. For a future-proof start, enroll at SSSIT Computer Education, known for offering the best Python training in Hyderabad. Your data science journey starts here!

#best python training in hyderabad#best python training in kukatpally#best python training in KPHB#Best python training institute in Hyderabad

Why Dehradun Is Ideal for Analyst Career Growth

Nestled in the foothills of the Himalayas, Dehradun is often celebrated for its scenic beauty, quality education, and peaceful lifestyle. However, in recent years, the city has also carved a place for itself in India’s growing tech and analytics landscape. As startups, healthcare innovators, and educational institutions embrace digital transformation, Dehradun is gradually becoming an attractive destination for aspiring data analysts.

Whether you're a student stepping into the professional world or a working professional seeking a career pivot, Dehradun offers a unique blend of opportunities, learning infrastructure, and work-life balance—making it a promising city for career growth in analytics. Enrolling in a structured data analyst course in Dehradun is often the first step toward unlocking these local and national career opportunities.

A Growing Analytics Ecosystem

Dehradun’s rise in the analytics domain is no coincidence. With the expansion of IT-enabled services, healthcare startups, and digital platforms in sectors like education and tourism, data has become central to how businesses in the city operate and grow. This demand has created space for analytics professionals who can interpret, organize, and present data to inform decision-making.

What makes this city particularly attractive is its hybrid environment—a mix of calm surroundings and budding corporate innovation. While metro cities often bring intense competition and overwhelming pressure, Dehradun provides a focused atmosphere where one can upskill and grow sustainably. If you're considering a data analyst offline course, Dehradun offers a conducive setting for distraction-free learning.

Educational Strengths and Local Talent Pool

Dehradun has long been known as an academic center, home to prestigious schools and universities. This educational legacy has laid the foundation for a strong local talent pool that is naturally inclined toward analytical thinking and structured learning. The presence of renowned institutions also ensures an intellectual environment that supports skill development beyond textbooks.

For those aiming to gain an edge in the job market, investing in a data analyst course in Dehradun can be transformative. The right training not only sharpens your skills in tools like Excel, SQL, Python, and Power BI but also builds the critical thinking and storytelling abilities essential for real-world data interpretation.

Opportunities Across Industries

The versatility of data analytics allows professionals to work across industries—and Dehradun is no exception. Local startups in health-tech, ed-tech, and tourism are now hiring analysts to help them understand user behavior, optimize services, and predict trends. Even government departments and NGOs are increasingly using data-driven insights for planning and public services.

By undertaking a well-rounded data analyst offline course, learners can position themselves to tap into these local opportunities. Offline courses offer the advantage of face-to-face mentoring, real-time doubt clearing, and peer collaboration—all of which add significant value to your learning experience.

Cost-Effective Learning and Living

One of Dehradun’s most overlooked advantages is affordability. Compared to major metros, the cost of living and education in Dehradun is far more reasonable. This allows students and early-career professionals to focus on learning without the burden of financial strain. You can gain the same industry-relevant skills that are taught in larger cities—often at a fraction of the cost.

Whether you choose to study full-time or alongside a job, a data analyst course in Dehradun offers a return on investment that’s both financially and professionally rewarding.

Why DataMites Is the Ideal Institute for Analyst Aspirants

For those seeking a trusted and comprehensive learning experience, DataMites stands out as a leading institute for data analytics training. Its programs are designed not only to cover theoretical concepts but also to provide hands-on experience with real-world data problems, equipping learners with the confidence and skills to step into the job market.

The courses offered by DataMites Institute are accredited by IABAC and NASSCOM FutureSkills, ensuring alignment with international industry standards. Students benefit from expert mentorship, real-world project experience, internship opportunities, and robust placement support.

DataMites Institute also offers offline classroom training in key cities such as Mumbai, Pune, Hyderabad, Chennai, Delhi, Coimbatore, and Ahmedabad—ensuring flexible learning options across India. For those in Pune, DataMites Institute offers an excellent platform to master Python and thrive in today’s competitive technology landscape.

In Dehradun, DataMites Institute offers the same quality of education through structured modules, practical labs, and dedicated mentor support. Their training not only covers core analytics tools but also includes project-based learning that prepares students for real industry scenarios. For anyone looking to join a data analyst offline course that leads to real career growth, DataMites Institute is a choice backed by results, reviews, and industry alignment.

Dehradun is more than just a quiet town with beautiful hills—it is evolving into a city where modern careers like data analytics can flourish. With the right mix of learning opportunities, local industry demand, and a nurturing environment, it offers a clear path for ambitious professionals. And with institutions like DataMites guiding the way, aspiring analysts can turn potential into achievement—right here in the heart of Uttarakhand.
